Hugging Face Agents, spaCy LLM, PaLM 2 and more

Welcome to The Token!

Welcome to the second edition of our newsletter, The Token. The pace of AI developments shows no sign of slowing, but we’ve got your back!

In this edition we take a brief look at Agents from Hugging Face, and at StarCoder, their ethically developed code model. We also cover developments from Explosion (explosion.ai) and founder Matt Honnibal’s take on how Large Language Models (LLMs) will fit into the existing ecosystem of tools. Finally, we summarise the relevant announcements from Google I/O.

As ever, let us know what you think, and if you find yourself in need of help with an NLP problem, drop us an email at hi@mantisnlp.com.

🔨 Hugging Face Agents 😎

Hugging Face has introduced Agents into transformers. Agents enable Large Language Models (LLMs) to plan before answering a prompt, as well as to use external tools. In the case of Hugging Face Agents, these tools are built on models from the Hugging Face Hub. This greatly extends what an LLM can do on machine learning tasks.

For example, when asked to summarise the content of our website, the agent breaks the task down into:

download the content of the website

summarise the content using a summarisation model

Most tools are based on existing models on the Hub, but the framework is extensible, so you can easily build your own. Agents support both OpenAI models and open source alternatives. We expect Agents to accelerate 🚀 prototyping, and they might enable use cases that are hard to plan for in advance, such as customer support.
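To make the plan-then-use-tools idea concrete, here is a minimal, library-free sketch of the website-summary example in Python. It is not the transformers API: in the release that introduced Agents you would instead create an agent class such as `HfAgent` or `OpenAiAgent` and call `agent.run(...)`. In this sketch the plan is hard-coded where a real agent would ask the LLM to write it, and the two tools, `fetch_page` and `summarise`, are hypothetical stand-ins that return canned text rather than downloading a page or calling a Hub model.

```python
from typing import Callable, Dict, List


def fetch_page(url: str) -> str:
    """Hypothetical download tool: returns canned text instead of fetching `url`."""
    return "Example page text. It stands in for the downloaded website content."


def summarise(text: str) -> str:
    """Hypothetical summarisation tool: keeps only the first sentence."""
    first = text.split(". ")[0]
    return first if first.endswith(".") else first + "."


# Tool registry: in Hugging Face Agents, tools wrap Hub models; here they are
# plain functions so the sketch runs offline.
TOOLS: Dict[str, Callable[[str], str]] = {
    "fetch_page": fetch_page,
    "summarise": summarise,
}


def plan(task: str) -> List[str]:
    """A real agent asks the LLM to produce this plan; we hard-code it."""
    if task.startswith("summarise "):
        return ["fetch_page", "summarise"]
    raise ValueError(f"no plan for task: {task!r}")


def run(task: str, argument: str) -> str:
    """Execute the planned tools in order, feeding each output to the next."""
    result = argument
    for tool_name in plan(task):
        result = TOOLS[tool_name](result)
    return result


print(run("summarise the website", "https://mantisnlp.com"))  # → Example page text.
```

Keeping tools as a name-to-function registry is what makes the framework easy to extend: adding a capability means registering one more entry the planner can choose.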

🔗 Read more in the documentation https://huggingface.co/docs/transformers/transformers_agents

🧪 StarCoder 💫

Hugging Face open-sourced StarCoder 💫, a 15B-parameter Large Language Model (LLM) trained to generate code in more than 80 programming languages. StarCoder is currently the best open source LLM for code generation, and it comes close to OpenAI’s similarly sized Codex model. Codex is the series of models that powers GitHub Copilot.

Even more importantly, StarCoder’s weights are openly available, which means you can use it to build a custom model that works best for your product, company or use case. StarCoder was trained only on permissively licensed code, and developers were given the opportunity to opt their code out 👏 A step to remove personally identifiable information (PII) was also added before training.

The open nature and ethical development of StarCoder open the door to state of the art code completion in companies that do not want their code shared with OpenAI or others, since the model can run on their own infrastructure.

🔗 Read more in the paper https://arxiv.org/pdf/2305.06161.pdf

🔨 spaCy LLM 🦙

spaCy has added support for Large Language Models (LLMs) ✨ You can now add components powered by OpenAI models or by open source alternatives such as Dolly 🐑 This makes it easy to combine spaCy’s fast, accurate components with LLM-powered ones, which helps when prototyping and when you have not yet collected enough training data.

For example, you can combine spaCy’s pre-trained NER model, which already extracts many useful entity types, with an LLM component that handles the entities specific to your application. This can get you to a working solution in hours rather than days. It also helps with use cases where the entities of interest are set dynamically by the user, i.e. where you have a cold start problem.
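The hybrid idea can be sketched without any library: a fixed-label model handles generic entities, and an LLM-style component handles labels the user chooses at runtime. Both `pretrained_ner` and `llm_ner` below are hypothetical stand-ins (a real LLM would be prompted with the label list rather than matching a lookup table), and in spacy-llm the LLM component is configured through spaCy’s config system rather than written by hand. The merge rule, keeping the pre-trained prediction when spans overlap, is our own assumption.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Entity:
    text: str
    label: str
    start: int  # character offset of the first character
    end: int    # character offset just past the last character


def find_all(text: str, phrase: str, label: str) -> List[Entity]:
    """Return every exact occurrence of `phrase` in `text` as an Entity."""
    found: List[Entity] = []
    start = text.find(phrase)
    while start != -1:
        found.append(Entity(phrase, label, start, start + len(phrase)))
        start = text.find(phrase, start + 1)
    return found


def pretrained_ner(text: str) -> List[Entity]:
    """Hypothetical stand-in for a pre-trained NER model with a fixed label set."""
    known = {"Google": "ORG", "London": "GPE"}
    return [e for phrase, label in known.items() for e in find_all(text, phrase, label)]


def llm_ner(text: str, labels: Sequence[str]) -> List[Entity]:
    """Hypothetical stand-in for an LLM component prompted with custom labels.

    A real component would send `text` and `labels` to the model; this fakes
    the model's answer with a lookup table so the sketch runs offline.
    """
    fake_answers = {"PRODUCT": ["Bard"], "ROLE": ["developers"]}
    return [
        e
        for label in labels
        for phrase in fake_answers.get(label, [])
        for e in find_all(text, phrase, label)
    ]


def combined_ner(text: str, custom_labels: Sequence[str]) -> List[Entity]:
    """Run the pre-trained model first, then add non-overlapping LLM entities."""
    entities = pretrained_ner(text)
    for ent in llm_ner(text, custom_labels):
        # Keep the pre-trained prediction whenever spans overlap.
        if all(ent.end <= e.start or ent.start >= e.end for e in entities):
            entities.append(ent)
    return sorted(entities, key=lambda e: e.start)


text = "Google showed Bard to developers in London."
# Custom labels are chosen at runtime, with no training data for them.
print([(e.text, e.label) for e in combined_ner(text, ["PRODUCT", "ROLE"])])
# → [('Google', 'ORG'), ('Bard', 'PRODUCT'), ('developers', 'ROLE'), ('London', 'GPE')]
```

Passing an empty label list falls back to the pre-trained entities only, which mirrors the cold start case: new labels can be tried immediately and replaced by a trained component once data exists.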

🔗 Here is the package https://github.com/explosion/spacy-llm

🖱 Google I/O

Google held its annual I/O conference last week. Unsurprisingly, there were a couple of AI announcements during the conference. The most important was PaLM 2, Google’s GPT-4 competitor, which will soon be available through an API. Based on the accompanying technical report, the model seems on par with GPT-4, though the report contains few direct comparisons.

Google also made Bard, its ChatGPT competitor, available in 180 countries (interestingly, not in the EU). In many current comparisons Bard looks about as capable as ChatGPT, but in our opinion its killer feature is its connection to the internet. This makes it much better at answering questions about recent events, such as "Who won Eurovision?", and it does so much faster than ChatGPT with browsing.

🔗 Here is the PaLM 2 technical report https://ai.google/static/documents/palm2techreport.pdf if you want to read it, and here is a link to the keynote

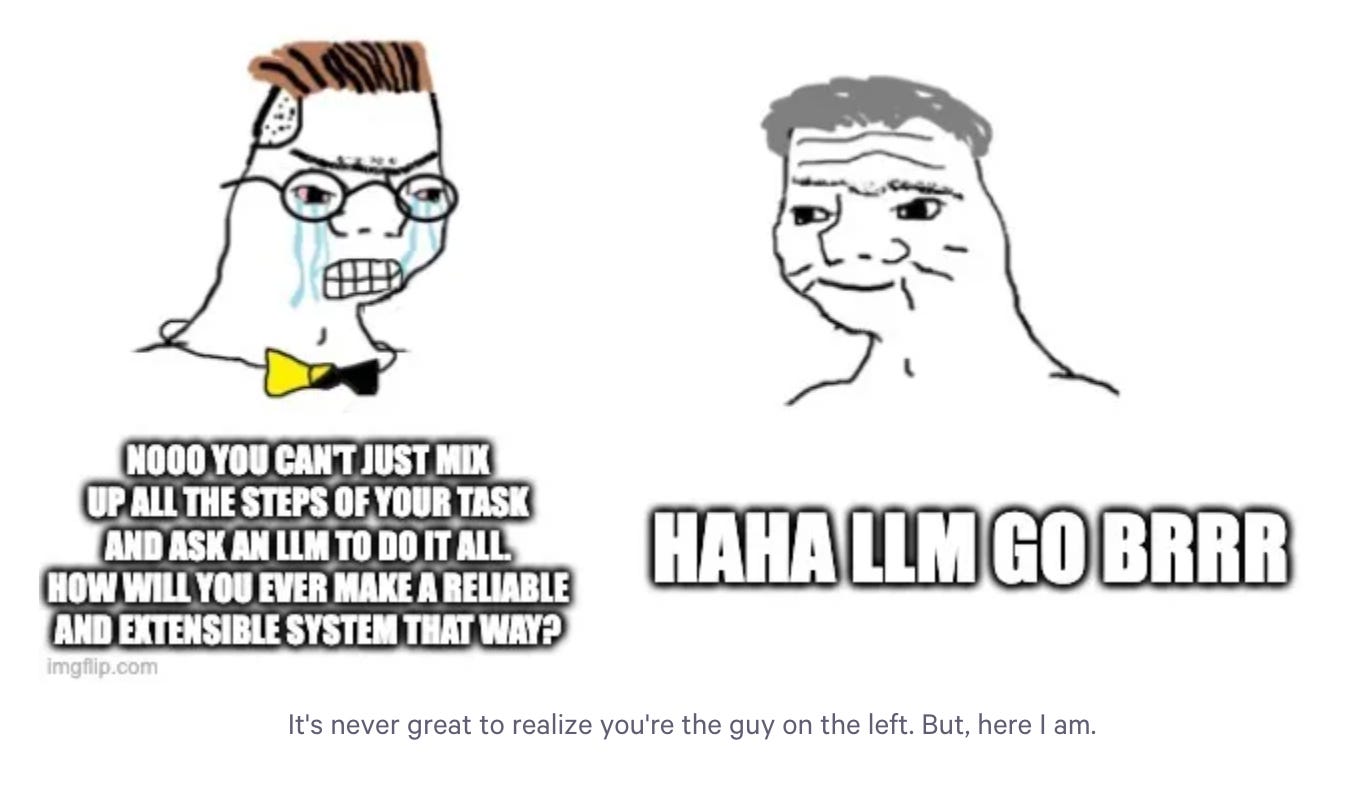

✍️ Against LLM maximalism

Matt Honnibal from Explosion, the makers of spaCy, wrote a very interesting take on the role of Large Language Models (LLMs) in practical NLP use cases. He cautions against the view that "a prompt is all you need" nowadays, i.e. replacing every step of an NLP pipeline with a suitably prompted LLM, an approach he calls LLM maximalism.

He highlights two main problems with the LLM maximalism approach:

Meeting speed and performance requirements may be very hard or even impossible

The approach is not modular, which makes it harder to debug and extend

His "NLP pragmatism" approach instead calls for using LLMs for prototyping and for accelerating data collection 🚀
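As a minimal sketch of the "accelerate data collection" workflow, assume a simple positive/negative text classification task. An LLM pre-labels raw examples (here `llm_label` is a hypothetical keyword stub standing in for a prompted model), and those labels train a small, fast, inspectable model. The choice of a from-scratch multinomial Naive Bayes is ours, not something the blog post prescribes; in practice a human would review the LLM’s labels before training.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def llm_label(text: str) -> str:
    """Hypothetical stand-in for a prompted LLM acting as an annotator."""
    cues = ("great", "love", "fast")  # substring match, deliberately crude
    return "positive" if any(cue in text.lower() for cue in cues) else "negative"


def tokenize(text: str) -> List[str]:
    return text.lower().replace(".", " ").replace(",", " ").split()


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: List[str], labels: List[str]) -> "NaiveBayes":
        self.class_counts = Counter(labels)
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> Optional[str]:
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / total)  # log prior
            n_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                if word not in self.vocab:
                    continue  # skip words never seen in training
                likelihood = (self.word_counts[label][word] + 1) / (n_words + len(self.vocab))
                score += math.log(likelihood)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


raw_texts = [
    "I love this library",
    "The docs are great",
    "Setup was slow and painful",
    "The API is confusing",
    "Inference is fast",
    "Installation failed twice",
]
silver_labels = [llm_label(t) for t in raw_texts]  # LLM-suggested, review in practice
model = NaiveBayes().fit(raw_texts, silver_labels)
print(model.predict("The library is great"))  # → positive
```

The LLM is called once per training example, while the resulting model is a separate, inspectable component that can be tested, debugged and replaced on its own, which is the modularity the post argues for.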

🔗 Here is the full blog post https://explosion.ai/blog/against-llm-maximalism