Imitating LLMs, Argilla feedback, GPT function calling and more

Welcome to episode 4 of The Token, your trusted guide to the latest advancements in the field of Natural Language Processing (NLP). In this edition, we delve into the world of imitating proprietary Large Language Models (LLMs) using open source equivalents. We'll introduce Argilla Feedback, a new open source tool for gathering preference and instruction data to aid in the tuning of LLMs. Plus, we'll take a look at OpenAI's new API addition, function calling, which opens up many use cases that require external tools 🔨

As always, we’d love to hear your thoughts, and should you need help with any NLP challenge, feel free to reach out at hi@mantisnlp.com.

🧪 The (not so) false promise of imitating proprietary LLMs

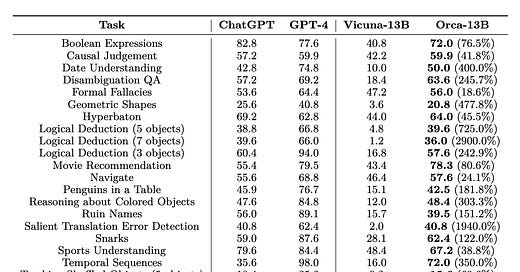

A new research paper titled "The False Promise of Imitating Proprietary LLMs" claimed that training smaller Large Language Models (LLMs) on data generated by proprietary LLMs, a form of distillation, can produce deceptively similar outputs but, on closer investigation, does not close the performance gap with those models on most tasks.

A few days later this was called into question by a new paper that used chain of thought explanations from ChatGPT and GPT-4 to close the performance gap in academic tests and hard reasoning benchmarks like BIG-Bench. To do that, the authors used 5M prompts from the Flan dataset that were annotated with ChatGPT and GPT-4 using chain of thought explanations.

This disagreement has been getting the most coverage, but we believe what's most relevant to the industry is that both papers demonstrate that you can successfully close the performance gap between proprietary and open source models on specific tasks by using synthetic data generated by proprietary models.

🔗 Read more here https://arxiv.org/pdf/2305.15717.pdf and https://arxiv.org/pdf/2306.02707.pdf

🖱Argilla Feedback

You can now use Argilla, an open source annotation tool we like to use here at Mantis, to collect preference data from humans and steer an LLM using reinforcement learning from human feedback (RLHF).

Collecting preference data and correcting the model's responses is one way to steer an LLM away from undesired behavior and to reward more helpful answers for your use case. 🚀

You can also use Argilla to construct instruction datasets to tune an LLM to follow instructions relevant to your company or product, a step before RLHF.
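To make the idea of preference data more concrete, here is a minimal sketch of how human rankings of model responses could be turned into the (chosen, rejected) pairs that preference-tuning methods typically train on. The record layout and the `ranking_to_pairs` helper are hypothetical and purely illustrative; they are not Argilla's API or export format.

```python
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses):
    """Turn responses ranked best-to-worst into (chosen, rejected) pairs.

    `ranked_responses` is assumed to be ordered by the annotator,
    with the most helpful response first.
    """
    pairs = []
    # combinations() keeps input order, so `better` always outranks `worse`.
    for better, worse in combinations(ranked_responses, 2):
        pairs.append({"prompt": prompt, "chosen": better, "rejected": worse})
    return pairs


# Hypothetical annotation: three responses ranked by a human.
example_pairs = ranking_to_pairs(
    "How do I reset my password?",
    [
        "Go to Settings > Security and click 'Reset password'.",
        "You can reset it in the settings.",
        "I don't know.",
    ],
)
print(len(example_pairs))
```

Three ranked responses yield three pairs, so even a small annotation effort can produce a fair amount of training signal.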

🔗 Read more about this new release from Argilla here https://argilla.io/blog/argilla-for-llms/

🖱OpenAI function calling

OpenAI now supports ‘function calling’ directly from the API, so you no longer need to pass function definitions via your prompt. The main benefit is that the latest models have been fine-tuned to work with functions, which should make them much more reliable at using tools 🔨

Tools and functions allow you to connect your LLM with other parts of your application like databases as well as external APIs. It also enables your LLM to handle numbers, dates and other numerical information better.
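As a rough illustration of the flow, the sketch below describes a function with a JSON Schema, then dispatches a model reply that asks for it to be called. It does not call the OpenAI API: the assistant message is simulated, and `get_order_status`, its parameters and the fake data are hypothetical. In a real application, a list like `FUNCTIONS` would be sent along with the chat request, and the reply may contain a `function_call` with the function name and its arguments encoded as a JSON string.

```python
import json
from typing import Optional

# Function description in JSON Schema form (hypothetical example function).
FUNCTIONS = [
    {
        "name": "get_order_status",
        "description": "Look up the status of an order by its id",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "Order identifier"},
            },
            "required": ["order_id"],
        },
    }
]


def get_order_status(order_id: str) -> dict:
    # Stand-in for a real database or external API lookup.
    fake_db = {"A123": "shipped"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


# Only functions listed here can be triggered by the model.
AVAILABLE = {"get_order_status": get_order_status}


def dispatch(message: dict) -> Optional[dict]:
    """Run the function the model asked for, or return None for plain text."""
    call = message.get("function_call")
    if call is None:
        return None
    func = AVAILABLE[call["name"]]
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return func(**args)


# Simulated assistant message, shaped like a function-calling reply.
simulated_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_order_status",
        "arguments": '{"order_id": "A123"}',
    },
}
result = dispatch(simulated_message)
print(result)
```

Keeping an explicit allow-list such as `AVAILABLE` means the model can only trigger functions you have deliberately exposed. The result would normally be sent back to the model so it can phrase the final answer.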

🔗 Read more https://openai.com/blog/function-calling-and-other-api-updates?ref=upstract.com

🧪 Direct preference optimization

You can now align Large Language Models with human feedback directly, without reinforcement learning.

Up until now the most common way to steer LLMs towards what users find most useful was through a process called reinforcement learning from human feedback (RLHF). This is a relatively complex step which also tends to be a bit unstable in practice.

As it turns out, the problem can be reformulated into one that optimizes directly on top of user preference data. This new approach is simpler, enjoys some theoretical guarantees and seems more stable in early experiments 🚀

This makes it even easier to tune an LLM to your users’ data and reduces the gap even further between the performance that can be obtained from open source models and closed source state of the art LLMs.
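For readers who want to see what "optimizing directly on preference data" looks like, here is a minimal sketch of the per-example loss described in the paper: the negative log-sigmoid of the scaled difference between how much the tuned model and a frozen reference model favor the preferred response over the rejected one. The function name, argument names and the `beta=0.1` default are illustrative assumptions. A real setup would compute the log-probabilities with an LLM and average the loss over batches.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss from summed log-probabilities of each response."""
    # Log-ratio of the tuned policy to the frozen reference for each response.
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(margin)) written as a numerically stable softplus(-margin).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# When the policy equals the reference, the margin is zero and the loss is log(2).
baseline = dpo_loss(-1.0, -2.0, -1.0, -2.0)

# Raising the preferred response and lowering the rejected one reduces the loss.
improved = dpo_loss(-0.5, -3.0, -1.0, -2.0)
print(baseline, improved)
```

The loss only needs log-probabilities from two models and a set of preference pairs, which is why no separate reward model or reinforcement learning loop is required.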

🔗 Read more in the paper https://arxiv.org/pdf/2305.18290.pdf

🖱Apple Vision Pro 🥽

Apple announced Vision Pro earlier this week, its take on the AR/VR space. Interestingly, the device is controlled using your eyes 👁, voice 🗣 and hands 🤏

We believe that voice 🗣 will play an important role as an input medium over the next years and we have already been helping clients incorporate voice control mechanisms into their products, powered by

Voice control typically consists of two steps, a ‘speech to text’ part and a ‘text to action’ part. Speech to text is a relatively solved problem, but can still be improved with your domain data for even better performance. Text to action is the more challenging part, since it requires you to anticipate the possible actions a user might want to take, which in a natural conversation medium may be different from what your product exposes through its user interface 🖱️