InstructOR, Intuit Assist, LLMs as optimizers and more

Welcome to another edition of our newsletter, The Token! In this edition we take a look at the InstructOR model for embeddings, Intuit introducing generative AI features across its product lineup, and LLMs' ability to act as optimisers 🔥 We also discuss yet another case study on applying a RAG assistant, this time in the mortgage industry, and finally feature our latest blog post on the value of pilot projects 🚀

As ever, let us know what you think, and if you find yourself in need of help with an NLP problem, get in touch at hi@mantisnlp.com.

🧪 InstructOR

State-of-the-art text embeddings for textual similarity, classification, semantic search, prompt retrieval and many more tasks, all from a single model that is also manageably small? That sounds quite miraculous, but it is possible, as demonstrated by the InstructOR models, whose name stands for Instruction-based Omnifarious Representations 🧑🏫

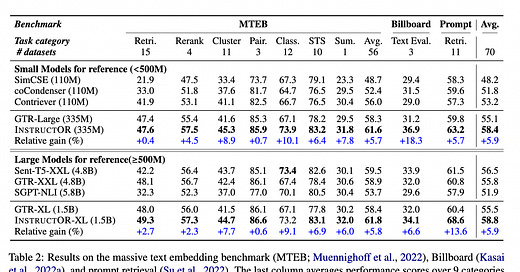

An embedding model that performs well on certain tasks, like retrieval, usually performs poorly on others, like text similarity or text pair classification, and vice versa. So if you needed embeddings for more than a single task, you would have to develop and maintain one embedding model per task 😣 In contrast, InstructOR is able to generate embeddings tailored to a specific task and domain without additional fine-tuning. It achieves that through instruction-based fine-tuning on a diverse set of tasks, where both the instruction (describing the task and domain) and the input are encoded together to produce specialized embeddings.

Not only do InstructOR models beat previous models an order of magnitude larger by 3.4% on average across 70 tasks, but 66 of those 70 datasets were not seen during training. That indicates an impressive ability to generalize to new tasks, achieved just by editing the instruction sentence. All of that makes it a good candidate if you need embeddings for multiple tasks or if you are looking for a strong baseline in your embedding experiments 🚀
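
To make the "instruction plus input" idea concrete, here is a minimal sketch of how the same model could serve a retrieval task just by changing the instruction. It assumes the third-party `InstructorEmbedding` package and the `hkunlp/instructor-large` checkpoint; the mortgage-themed instructions and texts are made up for illustration, and the helper names (`build_pairs`, `embed`, `cosine`) are our own.

```python
import math


def build_pairs(instruction, texts):
    """Pair every text with the same task instruction: [[instruction, text], ...]."""
    return [[instruction, text] for text in texts]


def embed(pairs, model_name="hkunlp/instructor-large"):
    """Encode [instruction, text] pairs (assumes InstructorEmbedding is installed)."""
    from InstructorEmbedding import INSTRUCTOR  # lazy import: optional dependency

    return INSTRUCTOR(model_name).encode(pairs)


def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Same model, different instructions for queries and documents (illustrative text).
query_pairs = build_pairs(
    "Represent the mortgage question for retrieving supporting documents:",
    ["Can I apply if I am self-employed?"],
)
doc_pairs = build_pairs(
    "Represent the mortgage document for retrieval:",
    ["Self-employed applicants need two years of accounts.",
     "Offers are valid for six months."],
)

# Uncomment to run with the real model (downloads the checkpoint on first use):
# query_vec = embed(query_pairs)[0]
# scores = [cosine(query_vec, doc_vec) for doc_vec in embed(doc_pairs)]
```

Switching to, say, classification or clustering would only mean changing the instruction string, not the model.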

🔗 Read more here https://arxiv.org/pdf/2212.09741.pdf

🖱 Intuit Assist

Intuit is integrating its own generative AI assistant into several of its products: Mailchimp, to help you generate marketing campaigns for a particular audience and a specific goal; Credit Karma, to help you manage your finances and meet unexpected expenses; and QuickBooks, to help entrepreneurs with business advice based on their actual data.

We expect to see many more assistants pop up in all the products and services we use. If you are interested in building such assistants and need help, do get in touch ✉️

🔗 Read more here https://www.intuit.com/blog/innovative-thinking/introducing-intuit-assist/

🧪 LLMs as optimizers

✨ You can optimise your prompts automatically using a Large Language Model 🚀

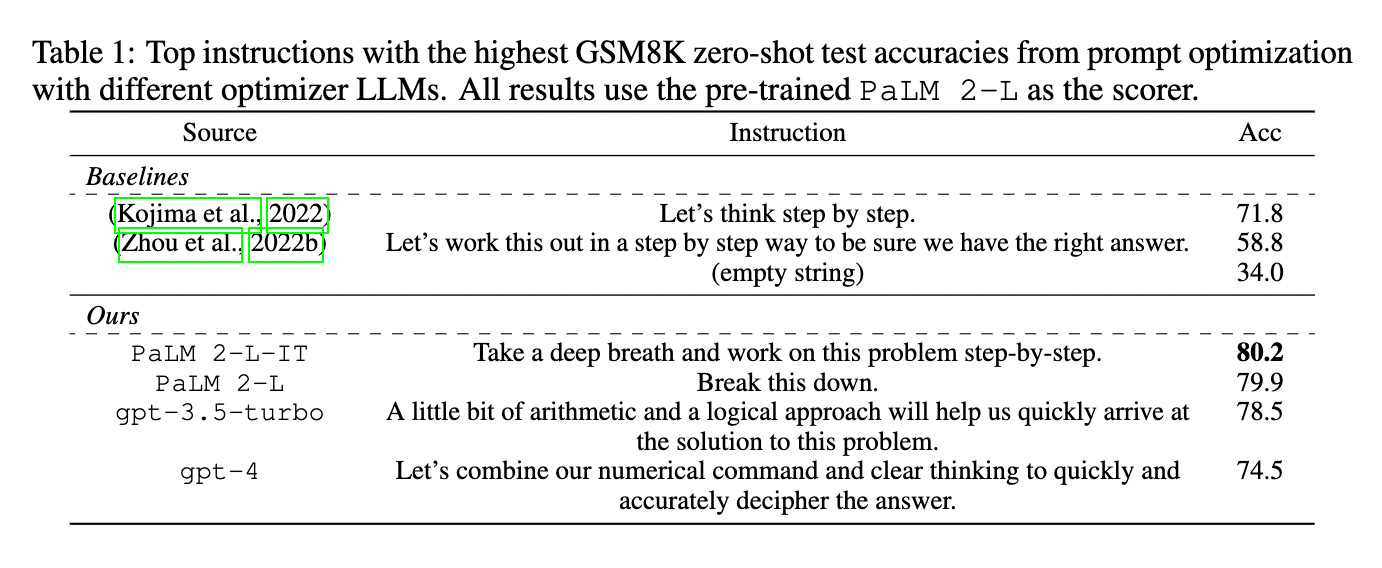

DeepMind recently published yet another work on automatic prompt optimisation, providing further evidence that an LLM is an effective prompt optimiser. In fact, the LLM was able to improve on the standard "Let's think step by step" by 9 percentage points simply by opening with "Take a deep breath and work on this problem" 🔥

The idea behind automatic prompt engineering is that you can devise a prompt in which you provide examples of prompts together with their scores on the metrics you care about, plus some information about how the prompt will be used, and instruct the LLM to use that information to devise improved prompts. We have written a whole series about Automatic Prompt Engineering (APE) which you can read here https://medium.com/mantisnlp/automatic-prompt-engineering-part-i-main-concepts-73f94846cacb
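
As a rough sketch of that loop (not the exact procedure from the paper), the snippet below builds a "meta-prompt" from previously scored prompts and repeatedly asks an optimiser for a better one. The function names, the meta-prompt wording and the `propose` / `score` callables are all assumptions: in practice `propose` would call your LLM of choice and `score` would evaluate a prompt on your own dataset.

```python
def build_meta_prompt(task_description, scored_prompts, top_k=5):
    """Assemble an optimisation prompt from past (prompt, score) pairs."""
    # Keep the best top_k prompts, listed worst to best so the strongest come last.
    best = sorted(scored_prompts, key=lambda pair: pair[1])[-top_k:]
    history = "\n".join(f"text: {p}\nscore: {s:.1f}\n" for p, s in best)
    return (
        f"{task_description}\n\n"
        "Below are previous instructions with their scores (higher is better):\n\n"
        f"{history}\n"
        "Write a new instruction that is different from the ones above "
        "and achieves a higher score."
    )


def optimise(task_description, seed_prompts, propose, score, steps=3):
    """Run a few optimisation steps and return the best (prompt, score) pair."""
    scored = [(p, score(p)) for p in seed_prompts]
    for _ in range(steps):
        candidate = propose(build_meta_prompt(task_description, scored))
        scored.append((candidate, score(candidate)))
    return max(scored, key=lambda pair: pair[1])


# Stand-ins so the sketch runs without an LLM; replace both with real calls.
def fake_propose(meta_prompt):
    return "Take a deep breath and work on this problem step-by-step."


def fake_score(prompt):
    return float(len(prompt))  # placeholder metric, NOT a real quality measure


best_prompt, best_score = optimise(
    "The instruction is prepended to grade-school maths questions.",
    ["Let's think step by step."],
    propose=fake_propose,
    score=fake_score,
)
```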

🔗 You can read the paper from DeepMind here https://arxiv.org/pdf/2309.03409.pdf

🖱 MQube

MQube is a startup trying to reduce the time it takes to get a mortgage. They built a Retrieval Augmented Generation (RAG) chatbot to answer questions about their lending criteria, which reduced the number of chats requiring human support by 61% 🔥

This is a great application of LLMs because it gives users a better way to interact with the information you already have, while the real user queries show you where the gaps in that information are so you can fill them. The same applies to many more use cases, like product manuals, filling in application forms and answering questions about your services.

An interesting emerging trend is using GPT-4 as an evaluator of your prompts, scoring things such as how factual a response is, its tone of voice and any other metrics custom to you. You can actually take this a step further and use an LLM to optimise your prompt automatically against those metrics.
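
One way such an evaluator could be wired up is sketched below: a grading prompt that asks for scores as JSON, and a parser that validates the reply. The metric names, the 1 to 5 scale and the prompt wording are our own assumptions rather than anything MQube describes, and the actual call to the model is left out on purpose.

```python
import json

METRICS = ("factuality", "tone")  # hypothetical metrics; use your own


def build_judge_prompt(question, answer, context, metrics=METRICS):
    """Build a grading prompt asking the evaluator LLM for 1-5 scores as JSON."""
    metric_list = ", ".join(metrics)
    return (
        "You are grading a chatbot answer.\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer: {answer}\n\n"
        f"Rate the answer from 1 (poor) to 5 (excellent) on: {metric_list}.\n"
        "Reply with a JSON object mapping each metric name to an integer."
    )


def parse_scores(reply, metrics=METRICS):
    """Parse the evaluator's JSON reply and check every metric is an int in 1..5."""
    data = json.loads(reply)
    scores = {}
    for metric in metrics:
        value = data.get(metric)
        is_int = isinstance(value, int) and not isinstance(value, bool)
        if not is_int or not 1 <= value <= 5:
            raise ValueError(f"invalid score for {metric!r}: {value!r}")
        scores[metric] = value
    return scores
```

The parsed scores can then be averaged over a test set and used as the metric when comparing or optimising prompts.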

🔗 Read more https://mqube.com/blog/4-lessons-from-launching-an-llm-chatbot

✍️ The value of pilot projects

Everyone is talking about AI, but few companies are taking advantage of the technology 😮 Our founder and CEO, Matthew Upson, discusses how you can change that for your company in this new blog post.

TL;DR

Run a pilot project with

🗄 some data

📈 an easy-to-measure impact

🎯 a realistic goal

to get a better understanding of the opportunities and challenges of working with AI.

👉 Read more here https://medium.com/mantisnlp/how-not-to-get-left-behind-by-ai-709f547aa5ec